Hal of 2001: A Space Odyssey

Sounds too weird, you say? Alarmist? Silly? Read on.

Consider the following scenario:

During a visit with her primary care provider, a check of Mrs. Smith's (not her real name) diabetes revealed that her blood sugar was not within the generally recommended A1c range of 6.5-7% (see page 16). She was free of low blood sugar spells and was otherwise healthy. The electronic health record (EHR) decision support protocols (like "Diabetes Wizard") prompted the care manager to OK a standard increase in Mrs. Smith's insulin dose ("Share the Care"). This was not only consistent with good clinical practice; the decision also promised a pay-for-performance payday for the doc's employer and fewer costly complications for the health insurer.

Several days later, her fatal car accident was ascribed to low blood sugar. It turns out that the science of blood sugar control is still evolving, and tight diabetes control isn't necessarily associated with better outcomes. In response, the Smith family lawyered up.

The Economist article asks about the legal dilemmas inherent in robotic decision logic. Can battlefield drones be adequately programmed to protect civilians? Can driverless cars intentionally run over a dog if it means avoiding a child? Short of banning these automatons entirely, it's obvious, says The Economist, that we're going to have to develop a legal framework to deal with them:

"...laws are needed to determine whether the designer, the programmer, the manufacturer or the operator is at fault if an autonomous drone strike goes wrong or a driverless car has an accident."

The DMCB agrees and suggests that this sensible recommendation be extended to the health care arena. EHR clinical programming may only imperfectly reconcile maximum near-term safety with longer-term outcomes, and it may fail to discern the subtleties that favor one treatment over another.

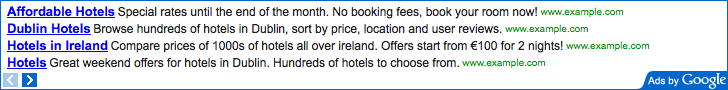

While white-coated plastic and metal cybertrons aren't taking care of diabetic Mrs. Smiths (yet), machine logic reminiscent of "Hal 9000" of 2001: A Space Odyssey already is. Instead of an unblinking red orb, there are on-screen decision-support icons. Instead of a disembodied voice, there are clinical pathways (for example, see page 8). And instead of having control over vacuum locks that exit to space, these computer entities are using logic trees to direct, prompt and recommend that doctors and their team nurses choose treatment "A" over treatment "B." If you think that the DMCB is being alarmist, consider that decision support, at last count, is involved in 17% of all outpatient visits in the U.S. That's a lot of legal exposure.

That's why reasonable laws are needed here too, since it's unclear how physicians, care managers, decision support programmers, EHR manufacturers (even with their notorious hold harmless clauses), physician clinics and health insurers would share in the culpability when decision-making allegedly goes awry. Since, in the current U.S. tort system, all could be named in a suit, the risk is arguably intolerable. Just ask any physician, whose dread of being sued has already resulted in billions of dollars in unnecessary costs.

Allowing that dysfunction to creep into computer-based clinical decision support will increase costs there too, add uncertainty and slow adoption.

The Economist has it right.

Image from Wikipedia